The Problem

For the final project in my Human Factors class, groups of students were asked to perform a comprehensive human factors assessment of a tool or product. We produced a write-up addressing current research and literature in the field, the results of usability tests we performed, and a proposed re-design based on research and testing.

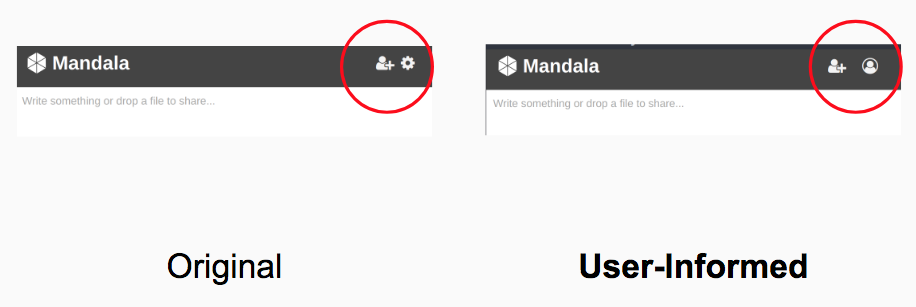

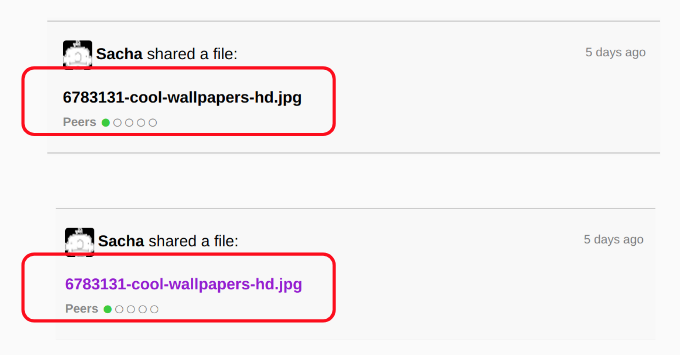

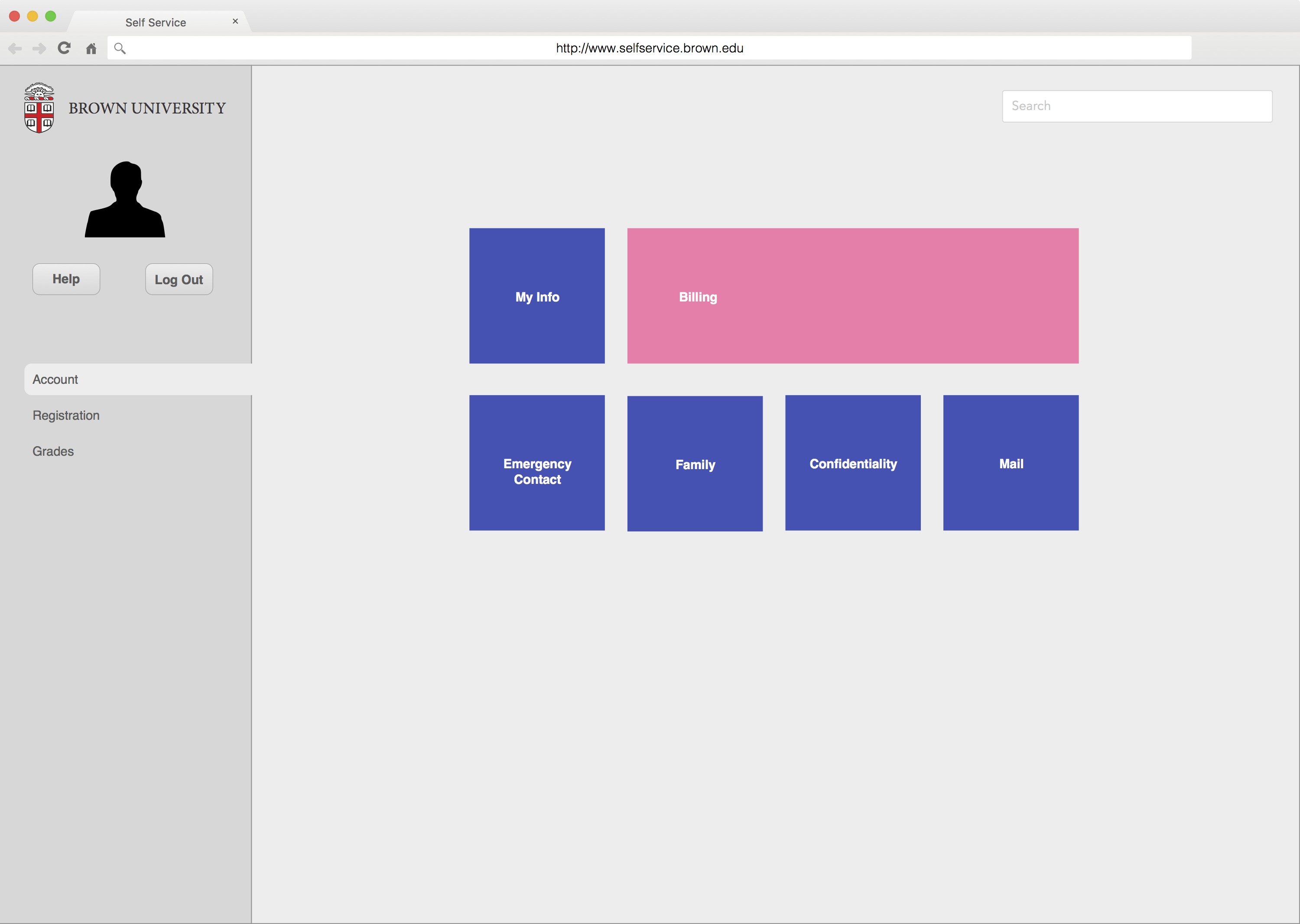

We chose to assess a piece of decentralized communication and file-sharing software developed by one of our group members. This presented several challenges, namely users’ unfamiliarity with how decentralized (or peer-to-peer) software works and the latency associated with it. We decided to focus our evaluation on improving UI design, feedback, and the on-boarding experience.

The Tests

In class we discussed certain basic principles of usability testing, including Jakob Nielsen’s recommendation to test with five users, which often reveals around 80% of usability issues. Before conducting our formal user tests, several new users did a blind evaluation of the software. We fixed the obvious problems they found and designed our user tests around the more polished iteration of the software.
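The five-user guideline comes from a simple model published by Nielsen and Landauer: if each tester independently uncovers a fraction L of the problems, n testers are expected to uncover 1 - (1 - L)^n of them. The sketch below works through that arithmetic. The function name is made up for illustration, and the default rate of 0.31 is the average Nielsen reports across the projects he studied, not something we measured for our software.

```python
def share_of_problems_found(n_users: int, per_user_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n_users testers.

    Assumes each tester independently finds the same fraction of problems
    (per_user_rate). The 0.31 default is Nielsen's reported average, used
    here as an assumption rather than a measured value for this project.
    """
    return 1.0 - (1.0 - per_user_rate) ** n_users


if __name__ == "__main__":
    # Show how quickly the returns diminish as testers are added.
    for n in range(1, 9):
        print(f"{n} users: {share_of_problems_found(n):.0%}")
```

Under these assumptions five users land a little above 80%, and each additional tester adds less than the one before, which is why small rounds of testing between design iterations are usually preferred over one large round.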

Users were given five tasks to complete, meant to target key features and potentially confusing interface elements. Tasks included changing the user’s display name, making a text post, and downloading a file. Users ranged in age from 19 to 35 and varied in their understanding of computer science and their general comfort with technology. During each test we took notes and recorded video. After completing all five tasks, each user was asked a series of structured questions and given an opportunity to offer open-ended feedback.

The Re-Design
